Sky lanterns have carried wishes into the dark for centuries. We wanted to see what that ritual might look like at planetary scale – not paper and flame, but metal, fire, and faith. Starship as lantern. Launch as offering. Humanity lifting light into the void.

This is a spot for SpaceX, made entirely with AI. Imagery was generated in Midjourney, refined in Photoshop, and animated through Kling, Minimax, Runway, and others, all managed through Flora's node-based pipeline. The script, voiceover, and music are AI-generated as well. The craft lay in the traditional compositing: color correction, glow adjustments, layer builds, cleanup. AI gave us 75% of the image. We added the rest.

A passion project. One of our favorites.

The sky lantern is one of humanity's oldest rituals – releasing light into darkness, sending hope upward. We wanted to reimagine that gesture at the scale of spaceflight. Not fragile paper, but Starship. Not a single flame, but a civilization reaching beyond itself.

The spot follows lanterns rising from every corner of Earth – remote islands, frozen frontiers, mountain peaks, endless horizons – until they become something else entirely. Metal. Fire. A rocket ascending. The same human impulse, expressed through engineering.

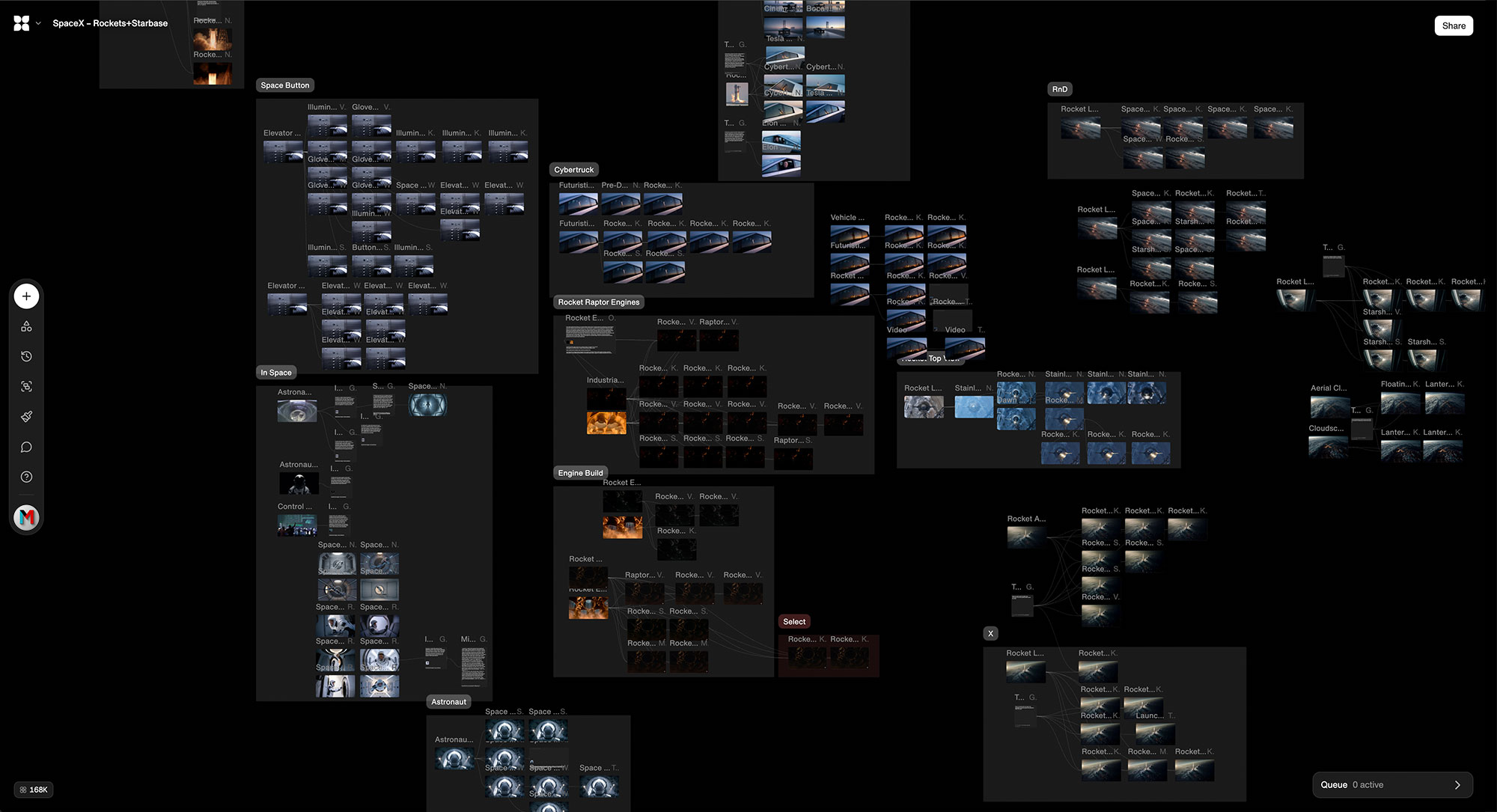

This was a fully AI-generated production, managed through Flora – a node-based pipeline that let us work across multiple models in a single workspace. Keyframes were generated in Midjourney, then brought into Photoshop for cleanup, color correction, and glow adjustments. The refined stills moved through Kling, Minimax, Runway, and other video models, with Flora orchestrating the workflow.

But AI only got us part of the way. The final shots required real compositing – the airplane window sequence, for instance, was built from separate layers: window frame, clouds, sky, lanterns, Starship. Each element generated independently, then assembled by hand. Color grading and cleanup continued through the end.

The script was written with AI assistance. The voiceover is AI. The music is AI. Even the Cybertruck reflection shot was generated through Grok. But the vision, the structure, the craft – that's still us.

A 45-second spot for SpaceX. A proof-of-concept for AI-assisted production. And a reminder of what we're actually doing when we launch rockets: carrying the light of one small world into the dark.

In Flora, every step from Midjourney keyframe to finished video clip was visible as a connected node. It's the kind of visual workflow we know from years of VFX and compositing, just pointed at a new set of tools.